08/07/2019 - 2019#19 Readings of the week

NOTE: The themes are varied, and some links below are affiliate links. History, Haskell. Expect a similar wide range in the future as well. You can check all my weekly readings by checking the tag here. You can also get these as a weekly newsletter by subscribing here.

Photo by Chris Marquardt on Unsplash

When Pepsi Had a Navy

From the title, I thought it was related to the “Sugar Wars”, but the reality is weirder.

Your Work Peak Is Earlier Than You Think

Mildly depressing. A quote I found interesting:

Careers that rely primarily on fluid intelligence tend to peak early, while those that use more crystallized intelligence peak later. For example, Dean Keith Simonton has found that poets—highly fluid in their creativity—tend to have produced half their lifetime creative output by age 40 or so. Historians—who rely on a crystallized stock of knowledge—don’t reach this milestone until about 60.

Why I (as of June 22 2019) think Haskell is the best general purpose language (as of June 22 2019)

Although I can somewhat read Haskell code, I still can’t write much of it. So I can’t really agree... yet.

Easy Parsing with Parser Combinators

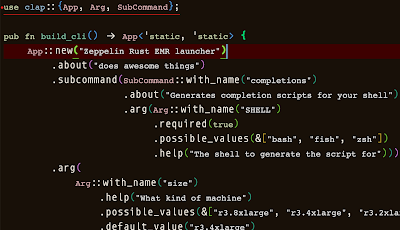

I have a small project written in AWK that I want to rewrite “properly” in Scala, and I’d need to do some real parsing (not the ad-hoc parsing you always end up writing in AWK), so learning to use FastParse is worth it. Also, Li is a very clear writer.

Sam and Max Hit the Road Development History

So many fond memories from this game; I’m getting it again from GOG any day now. Also, this post made me look up where my copy of the ??? was.

Comonads for Life

Implementing the Game of Life using comonads (the dual of monads).

Real-world dynamic programming: seam carving

I have always been fascinated by seam carving. It’s so amazing.

The surprising story of the Basque language

Uhm, Armenia is not even close.

History Will Not Be Kind to Jony Ive

Flexgate, antennagate, keyboardgate. There have been many f-ups that can be traced back to chasing a specific design. I hope Apple gets back to top design and top usability now.

'It's getting warmer, wetter, wilder': the Arctic town heating faster than anywhere

Longyearbyen (Svalbard’s capital) is in danger.

Reasons of State: Why Didn't Denmark Sell Greenland?

An analysis by Gwern Branwen of why Denmark didn’t sell Greenland to the US after WWII (the US wanted it as a base).

'Like a military operation': restoration of Rembrandt's Night Watch begins

The weight of the painting will surprise you.

📚 Sprint

The nitty-gritty details of how to run design sprints (a Google practice). Interesting.

📚 Diaspora

This is the second Egan book I’ve read (after The Clockwork Rocket), and I found Diaspora much better, although it has a weak ending and a somewhat erratic plot. The book has some strong Star Maker vibes. By the way, when adding the link above I thought the Audible narrator was this Adam Epstein (a pretty darn good mathematician I know).

📚 Thinking in bets

The general idea is sound, but it’s one of those books that could have been written as a long blog post or a short essay.

📚 Set your voice free

Exercises to improve your voice. I have recently started doing the daily warm-ups, but so far I have felt no difference. Of course, other people should be the ones to tell me.

🎥 Wardley mapping interview

I was recently interviewed by John Grant and Ben Mosior as part of a set of interviews leading up to MapCamp London 2019. If you are interested in my thoughts about mapping the tech landscape, I also wrote down my train of thought in this blog post.

Newsletter?

These weekly posts are also available as a newsletter. These days (since RSS went into limbo) most of my regular information comes from several newsletters I’m subscribed to, instead of me going directly to a blog. If this is also your case, subscribe by clicking here.

07/07/2019 - A (section) of a map of the data engineering space

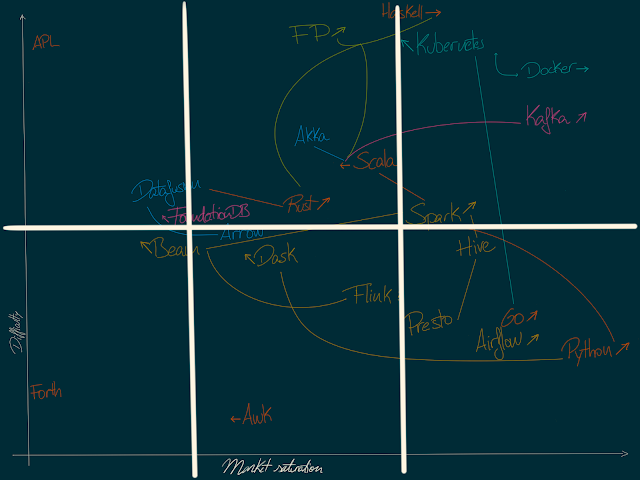

The map and problem described here were part of my presentation Mapping as a tool for thought, and were mentioned in my interview with John Grant and Ben Mosior (to appear sometime soon on the Wardley Maps community YouTube channel). I’m looking for ideas on how to make this map easier to understand and more useful, so I posted it to the Wardley Maps Community forums requesting comments.

Problem I'm trying to solve

As a consultant (and as someone always trying to keep up with technology) I'm interested in being able to answer three questions about a language or technology:

- How easy is it to find work/workers in the area right now?

- How hard is it to learn?

- How easy is it going to be to find work/workers in the area once I'm proficient enough?

This problem has been at the back of my mind for many years, and upon getting proficient with Wardley mapping, I thought I could just map it. Of course, it's not a Wardley map, because the axes are completely different, but since it has anchors and movement, it is spiritually close enough for me.

In the diagram I will show in a while, I have placed technologies I am proficient in, am currently learning, or am looking forward to learning. In all of them I am at least a beginner, in the sense that I know what they are used for and have done some minor PoC (proof of concept) to get an idea of how they work.

Looking for axis metrics

There are several ways you can address this technology space to answer the questions above. The first and easiest metric, and one of the axes I have used (Y axis), is Difficulty. Since I know something about each technology, I can rank them on Difficulty, at least relative to each other. It's only a qualitative metric of difficulty, because in any new technology there are always unknown unknowns. There is no movement assumed on this axis, because Difficulty is supposed to stay constant over time (leaving aside two effects that offset it: the more you know, the easier it is to learn, and familiarity with similar concepts helps too; you could think of these two as doctrine in such a map).

One natural metric for the other axis could be popularity, as measured by any of the several programming language/framework popularity rankings. You can use popularity as one of the axes, and use arrows to indicate whether it is growing or diminishing. But popularity alone does not help in answering questions 1 and 3. What we need is to know how large the market for this technology is, and how large the pool of workers in this market is. Could we use either as an axis?

If we were to use market size as the X axis, we would probably have large markets on the right and small markets on the left, and we would likely use arrows to indicate growing and shrinking markets. But market size alone won't answer the questions either. A small thought experiment: imagine we have the largest market possible, and it is growing... but the pool of workers for that technology is 2x the size of the market. It would be impossible to find work there (but it would be easy to find workers). This suggests that a better choice for the X axis is market saturation, i.e. the ratio of the worker pool to the market size. Highly saturated markets are uninteresting if you are looking for work, but are very interesting if you are starting a company: you'd have an easy time finding hires for that technology. Market saturation is related to flows (as in Systems thinking flow analysis) of workers into a variable-sized container.

Markets become saturated in one of 3 ways:

- Market is growing, but the pool of workers grows faster

- Market is stagnating with a growing pool of workers

- Market is shrinking faster than the pool of workers is shrinking

Markets become desaturated in one of 3 ways as well:

- Market is growing faster than the pool of workers is growing

- Market is stagnating with a shrinking pool of workers

- Market is shrinking slower than the pool of workers is shrinking

We can represent all these with slanted arrows: slanting up covers growing markets, slanting down covers shrinking markets. Then the arrow points left or right depending on whether the market is becoming saturated or desaturated.
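A minimal sketch of the arrow logic, assuming saturation is measured as worker pool divided by market size and that both change at constant relative rates per period. The `Technology` class, its fields and the "flat" label for stagnating markets are hypothetical illustrations, not part of the map itself. Since next-period saturation is W(1+g_W) / (M(1+g_M)), it rises exactly when the worker pool grows faster (or shrinks slower) than the market, which reproduces the six cases listed above:

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Technology:
    """Hypothetical model of one technology on the map."""
    name: str
    market_size: float    # e.g. open positions (illustrative unit)
    worker_pool: float    # e.g. people able to fill them
    market_growth: float  # relative change per period, e.g. 0.10 for +10%
    worker_growth: float  # relative change of the worker pool per period

    def saturation(self) -> float:
        """Workers per unit of market: above 1.0 means more workers than work."""
        return self.worker_pool / self.market_size

    def arrow(self) -> Tuple[str, str]:
        """Return (slant, horizontal direction) for the arrow on the map."""
        # The slant only encodes how the market itself evolves.
        if self.market_growth > 0:
            slant = "up"
        elif self.market_growth < 0:
            slant = "down"
        else:
            slant = "flat"  # stagnating market (assumed horizontal arrow)
        # Saturation W/M is multiplied by (1 + gw) / (1 + gm) each period,
        # so it increases exactly when gw > gm (assuming both rates > -1).
        if self.worker_growth > self.market_growth:
            direction = "saturating"
        elif self.worker_growth < self.market_growth:
            direction = "desaturating"
        else:
            direction = "stable"
        return slant, direction


# The thought experiment: a growing market with twice as many workers as work
# (growth rates chosen only for illustration).
example = Technology("hypothetical", market_size=100, worker_pool=200,
                     market_growth=0.10, worker_growth=0.20)
print(example.saturation(), example.arrow())  # 2.0 ('up', 'saturating')
```

Where a technology lands left or right still depends on comparing its saturation with the anchors, which this sketch does not attempt.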

With market saturation as an axis and arrows to indicate the evolution of a technology, we can now almost answer questions 1 and 3. There is still the question of market size, which can't be represented with such a relative measure. Although we could add circles to represent current market size, that would make an already weird map even weirder. Hence, market size is not considered.

The map

Here you can see the map. Before I get a bit into the topography of it, let me quickly define some of the technologies:

- APL: programming language based on non-ASCII symbols, designed in the 60s. Not extensively used, but still in use

- Airflow: workflow scheduler for data operations

- Akka: Scala/JVM actor framework used for reactive programming, clustering, stream processing, etc

- Arrow: cross-language platform for in-memory data. Used in Spark, Pandas, etc

- Awk: special-purpose programming language designed for text processing

- Beam: unified model for data processing pipelines. Can use Spark, Flink and others as execution engines

- Dask: cluster-capable library for parallel computation in Python.

- DataFusion: Rust-based, Arrow-powered in-memory data analytics

- Docker: containerisation solution

- FP: as in Functional Programming. Software development paradigm based on immutable state, among other things. Scala and Haskell are some of the most mainstream languages for it

- Flink: cluster computing framework for big data, stream focused

- Forth: stack-based, low-level programming language. Not in common use.

- FoundationDB: multi-model distributed NoSQL database, offering "build your own abstraction" capabilities

- Go: statically typed, compiled programming language

- Haskell: statically typed, purely functional programming language

- Hive: data warehousing project over Hadoop, roughly based on "tables"

- Kafka: cluster-based stream processing platform (often used as a message bus) written in Scala

- Kubernetes: container orchestration system for managing application deployment and scaling. Written in Go, depending (non-strictly) on Docker

- Presto: distributed SQL engine for big data

- Python: interpreted high-level programming language, widely used in data science and engineering

- Rust: memory safe, concurrency safe programming language. Has some functional capabilities

- Scala: JVM based language offering strong typing and functional and OOP capabilities

- Spark: cluster computing framework for big data, batch and stream (stronger in batch)

These cover part of the data engineering space (Flink, Spark), as well as technologies I want to get better at that are close enough (Kubernetes, FoundationDB), and technologies I know that are not directly related (AWK, APL, Forth) but are used as anchors.

Anchors

To display relative positions, I needed to anchor some of the technologies. For instance, Haskell and APL set the bar for difficulty, with AWK setting the minimum, while Python and Forth set the extremes for saturation. Everything else is placed relative to these.

Links and colours

In the map, I have used colours to distinguish languages, frameworks, libraries, containerisation and databases. Colour is not fundamental though; links are. Related technologies are linked: Spark is written in Scala, and can be used with Scala, Python and other languages. Hence, changes in market saturation for Spark indirectly affect market saturation for Scala.

Empirical map division

We can think of the map as divided into 2 areas vertically (high barrier to entry and low barrier to entry) and 3 areas horizontally (saturated, accessible, desaturated).

Quick overview

If you were a CTO, you'd probably be interested in:

- Low barrier to entry and a saturated market, for high-turnover positions (easier and cheaper to hire and train)

- Accessible markets currently becoming saturated, for more stable positions (it will become easier to hire in the future)

- High barrier to entry with growing markets, for proofs of concept and exploration (exploring new technologies that may make an impact in the future)

When deciding on future work, as a consultant I'd be interested in:

- First, growing markets with a high barrier to entry, for current learning. Otherwise, if the barrier to entry is too low and the market is lucrative enough, any return on the time invested will eventually tend to 0.

- Second, accessible markets with a low-to-mid barrier to entry, for imminent work opportunities.

- Finally, to hedge my bets on a long-term plan, anything with very low saturation where the market is unknown or may be growing.

Curiosities

Haskell and Rust raise interesting questions. Haskell has a very high barrier to entry, and the market is not very large and might not be growing fast, but there are developers working in other languages (Scala, Rust, Kotlin, Go, even Python) who would love the opportunity to work in Haskell. This makes the Haskell job market actually saturated (or at least saturated if you consider the worldwide market). Thus, starting a company focused on Haskell might not be as bad an idea as it sounds. Similarly with Rust: Rust is growing as a side-project language, and the number of developers familiar with it is growing faster than the market, which makes it an interesting target for a new company.

We'd have Python on the other side: since it is taking over as the lingua franca of data science and engineering, and becoming one of the teaching languages at universities, the number of developers with enough knowledge to join the worker pool is growing faster than the market (even if the market for Python is growing at a fast pace). This makes it an ideal language for creating a company or a consultancy (large pool of candidate workers), but not so interesting for an independent consultant, since the competition could be too large.

Questions and further ideas

This is the approach and train of thought I have followed to trace these ideas, but it’s still a work in progress. I’d like to hear what you have to say about this: what would you change? What would you do differently? I'm still unsure about using market saturation and arrows to show market and worker pool behaviour, but I have not found anything easier to represent. Ideas? Here are some areas where I’m unsure or have more questions.

What other approaches would you have taken to explore these questions?

There are probably many other ways to approach these questions. What would yours be?

Do you think market size would need to be shown?

It could be shown with a circle (of varying size, to allow comparison) below the arrow indicating how saturation behaves, but that could make the map way too complex. Any other ideas? Do you think it is that important, as long as saturation is taken into account?

Links between related technologies are a bit hazy

The links between technologies are a bit too abstract. An "increase" in "Python" moves Airflow, Spark, Dask and any related technologies "higher"... but in what sense? Popularity? Market share? Market saturation? I suspect the link is useful to see, and it is supposed to bring some dynamics/movement, but I'm still unsure how.

Flows

An interesting approach I didn't pursue is using flow maps. For each programming language, there is a set of flows into other languages. For instance, Scala developers tend to be interested in Kotlin, Rust and Haskell, with some making the jump as soon as the market is able to absorb them (and for each of these flows we can assume there is a non-zero flow in the opposite direction). Similarly, we'd have flows from Python to Go and Scala, and from Go to Rust. These could inform us about market trends and behaviours, but they are not only hard to show on a map (what would the axes be? what would the anchors be?) but also might not be interesting enough on their own. What do you think?

25/06/2019 - 2019#18 Readings of the week

Photo by Nik Shuliahin on Unsplash

NOTE: The themes are varied, and some links below are affiliate links. Software engineering, history, planning, data engineering. Expect a similar wide range in the future as well. You can check all my weekly readings by checking the tag here. You can also get these as a weekly newsletter by subscribing here.

Fresh look at mysterious Nasca lines in Peru

An analysis of what kind of birds they represented.

4 Simple Steps To Set-up Your WLM in AWS Redshift

Good suggestions on workload management and process queues for Redshift.

Adventure Games and Eigenvalues

Finding dead ends in a game using Markov processes (instead of a formal language approach).

When pigs fly: optimising bytecode interpreters

Quite a meaty post about... well, optimising interpreters (incidentally, bytecode-based).

See through words

Did you know metaphor design is a thing? Read this article for more.

Decision tables

Calling decision tables a formal method may be a stretch, but they can clarify your thinking. And that is one of the powerful things formal methods bring.

Monad Transformers aren’t hard!

No, they aren't, but your heap can suffer in Scala!

🎥 Wardley Maps Saved The Day - How Stack Overflow Enterprise automated all the things...

I’ve been into Wardley mapping for several months (I even gave a presentation on mapping at SoCraTes UK 2019) and I’m basically consuming any content related to it. This short video is a very good intro to Wardley mapping, by the way.

🎥 Cartoons are about how drawing and writing work together on the page

A pretty funny video presentation by Tom Gauld.

📚 The goal: a process of ongoing improvement

It’s like Sophie’s World but for the Theory of Constraints (probably with a whiff of the Toyota Production System).

📚 Make your contacts count

Networking tips. It describes very directed ways of building a network and actually being useful in it, not just a parasite.

10/06/2019 - 2019#16 Readings of the week

Photo by Roman Mager on Unsplash

Meet the Money Whisperer to the Super-Rich N.B.A. Elite

It must be an interesting job.

Thought as a Technology

An essay by Michael Nielsen. Highly recommended.

A tale of lost WW2 uranium cubes shows why Germany’s nuclear program failed

Curio.

Counting to infinity at compile time – The Startup – Medium

You can do really weird things with Scala at compile time, and base 3 is pretty cool.

A visual introduction to Morse theory

I was surprised to see this posted on Hacker News, so I decided to read it for old times' sake. I learnt something (I never had a class on Morse theory; it was just one of those intriguing sections in the library).

The cutting-edge of cutting: How Japanese scissors have evolved

I have some weird scissors, but these go beyond weird.

A different kind of string theory: Antoni Gaudi

That idea was clearly genius.

Formally Specifying a Package Manager

Using Alloy. I really like Alloy.

Maker's Schedule, Manager's Schedule

I was talking with a friend earlier about a previous post in my Weekly Readings series (Do I truly want to become a manager?) and he hadn't read this one, so I'll share it here too.

Schema evolution in Avro, Protocol Buffers and Thrift

I kind of like the sound of how Avro handles schemas. It seems efficient, although it also seems... prone to disasters.

Improve Apache Spark write performance on Apache Parquet formats with the EMRFS S3-optimized committer

This is optional in EMR 5.19 and will be the default in 5.20, so we won't see much difference, except in performance.

Why Enthusiasm is a Bad Thing in a CTO

“Hey, look, a squirrel!” is 10x more dangerous when it's the CTO spotting squirrels.

🖥 Mapping as a Tool for Thought

A presentation I gave on Wardley mapping and "mapping" in general at SoCraTes UK 2019. I really enjoy this conference.

🎥 Skip the first three months of development for your next app

This ties very well with some of the Wardley mapping concepts.

26/05/2019 - 2019#14 Readings of the week

Photo by Darius Soodmand on Unsplash

Schema Management With Skeema

How SendGrid manages schema updates internally. A pity it’s focused on MySQL and family, since I prefer Postgres.

How to do hard things

I wasn’t aware of it, but this is exactly how I approach anything I don’t know how to do. Don’t miss this one.

The bullshit I had to go through while organizing a software conference

I’ve been an organiser and luckily didn’t encounter any of this. Fingers crossed, since we are preparing things at PyBCN.

The reason I am using Altair for most of my visualization in Python

I usually like having maximum power and expressiveness available... but eventually I just want to make easy things easy. I’ll try Altair next time I need to plot anything. To be fair, lately I’ve been turning to gnuplot a lot: nothing beats it for plotting a text file you have lying around.

Introducing Argo — A Container-Native Workflow Engine for Kubernetes

This is what is now being used at BitPhy, after I recommended that they... well, not use Airflow. To be fair, they were considering both Argo and Airflow, and given they are heavy on Kubernetes, Argo sounded like a better fit.

Do I truly want to become a manager?

Six questions you should consider before making the leap out of the IC (individual contributor) route. Thanks to CM for sharing it.

Ask HN: What overlooked class of tools should a self-taught programmer look into

You can find some suggestions in these answers.

Give meaning to 100 Billion events a day

How Teads leverages AWS Redshift.

Performant Functional Programming to the max with ZIO

You were wondering: which ZIO post is he going to share this week?

Meet Matt Calkins: Billionaire, Board Game God And Tech's Hidden Disruptor

A friend of mine is designing and producing a board game (I’ll share the Kickstarter when it's ready), so this was a fun read. He’s not a billionaire though.

Open-sourcing the first OpenRTB Scala framework

I’m not sure what the performance of an RTB (real-time bidding) system can be in Scala, but I’m definitely interested.

Zero Cost Abstractions

Rust is always sold as a zero-cost abstraction language. What exactly does that mean?

A novel data-compression technique for faster computer programs

I don’t know, this sounds pretty much like what Blosc does.

🎥 Fast Data with Apache Ignite and Apache Spark

(This is an oldie.) I tried Ignite+Spark around a year ago and couldn’t get it to work properly (segmentation fault!). I’ll try again: it can open up a lot of things if it works as promised.

🎥 Thinking for Programmers

Leslie Lamport selling you the why of specifications. Highly recommended, especially for people who think specifying is complex, long, unnecessary or anti-agile.

🎥 Live Coding with Rust and Actix

I was very impressed by this video (I watched it as “background”, not actively). Very focused, and all written in one go.

🎥 Solving every-day data problems with FoundationDB

FoundationDB is on my list of technologies to watch/follow and learn. Get a glimpse of why here.

📚 Soft Skills

It’s not a bad book, but it is basically a summary of things I had already picked up elsewhere. You can give it a go.

🎼 Time Out by the Dave Brubeck Quartet

Saw this recommended somewhere, and I’m liking it a lot (won’t remove Bill Evans as my favourite jazz pianist though).

13/05/2019 - 2019#12 Readings of the week

Photo by Zsolt Palatinus on Unsplash

Testing Incrementally with ZIO Environment

I’ve been reading a lot about ZIO lately, even to the point of writing a few simple things with the help of a more advanced Scala friend. As I’ve mentioned before, it looks solid.

Medieval Africans Had a Unique Process for Purifying Gold With Glass

No plans to smelt anything soon, but this was interesting.

Rust Runtime

The proposed approaches for async-await in Rust are shaping up.

Moving from Ruby to Rust (at Deliveroo)

It should surprise no one, except maybe hardcore Rubyists, that moving from Ruby to Rust yielded terrific speed-ups. Something similar should happen with Python, though.

Zero-cost futures in Rust

One of the core tenets of Rust is having zero-cost abstractions (that is, performing as close as possible to the equivalent hand-written code). Here you can read how futures fit that goal.

A quick look at trait objects in Rust

Traits will be familiar to Scala or even Go or Java developers, but there are a few gotchas/differences you need to be aware of.

Druid Design

A friend of mine has used Apache Druid before, and has been really happy with its performance, so I’ve been looking into possible use cases to road-test it.

Make your own GeoIP API (in Python)

I’ve linked to GeoIP approaches before. This one is interesting as long as you only want country-level resolution; I usually need city-level or better.

An in-depth look at the HBase architecture

Similarly to Druid, I’ve been looking at several options to speed up some areas. Apache HBase might be one of them, and I wanted to see how it works under the hood. I love these details.

🎥 ZIO Schedule

As mentioned above, ZIO is shaping up nicely, and the scheduling helpers presented here are one of the selling points, depending on what you do.

🎥 Pure functional programming in Excel

Duh... but... to be fair... there is a point in here.

🎥 Gradual typing of production applications

Suggestions for incrementally adding types to Python code, at Facebook (Instagram, to be precise).

🎥 Effects as Data

Everything here should sound familiar if you are at all into functional programming.

19/02/2019 - Apache Hive and java.lang.ClassCastException on start

Photo by Annie Spratt on Unsplash

When running the hive command, I got the weird-looking error:

```
Exception in thread "main" java.lang.ClassCastException: class jdk.internal.loader.ClassLoaders$AppClassLoader cannot be cast to class java.net.URLClassLoader (jdk.internal.loader.ClassLoaders$AppClassLoader and java.net.URLClassLoader are in module java.base of loader 'bootstrap')
```

That looked like a JVM incompatibility, so I switched from GraalVM (the one I use by default) to Java 8 (I have aliases jgrce, jgree, j8 and j11 to switch JVMs). Still the same error. Weird. Maybe Java 11 (the other JVM I have installed)? Nope, same error. A quick Google search confirmed that this was related to Hive picking up Java 11, while it only works with 7, 8 or 9 (not sure about 9). This in turn is due to the Hive boot scripts looking for the latest JRE that is at least 7, like the hive command here:

```bash
JAVA_HOME="$(/usr/libexec/java_home --version 1.7+)" \
  HIVE_HOME="/usr/local/Cellar/hive/3.1.1/libexec" exec \
  "/usr/local/Cellar/hive/3.1.1/libexec/bin/hive" "$@"
```

This will pick 11, where the application class loader is no longer a URLClassLoader (this changed in Java 9), so Hive won't start. Sadly, the only reasonable fix is modifying the scripts after installation, unless you want to just uninstall Java > 1.8. For me this was not an option, so I just modified the scripts by removing the JAVA_HOME assignment (since I set my JAVA_HOME globally when I switch between JVMs). And fingers crossed that I remember I did so the next time I upgrade Homebrew.

17/02/2019 - 2019-6 Readings of the week

NOTE: The themes are varied, and some links below are affiliate links. Software engineering, adtech, psychology, Python. Expect a similar wide range in the future as well. You can check all my weekly readings by checking the tag here. You can also get these as a weekly newsletter by subscribing here.

Finding Lena Forsen, the Patron Saint of JPEGs

I have written about Lena Forsen (previously Soderberg) before. This article explains the story behind the classic picture, the issues that have arisen with it over the years, and a bit of the perspective of its subject, Lena herself.

Incrementally migrating over one million lines of code from Python 2 to Python 3

Did you know that Dropbox (the client) is written in Python? Well, I did, but I wasn't aware of its scale. And now it's mostly Python 3, which is good to know. Bonus points to Dropbox for using pytest as well.

How Criteo is trying to navigate GDPR

Since I work in adtech, I need to keep up with what's going on. This article explains some of the impact of GDPR on Criteo, one of the largest players in programmatic advertising in Europe. By the way, Criteo have a superb engineering blog, although it can be a bit hard to read through an adblocker. Also, the linked post is behind a paywall: if you are interested, paste the URL of the link into Google and click on the first search result to read it.

10 Reasons Why GTD Might Be Failing

The title of the linked article should end in “for you”. It lists the common problems a beginner-to-intermediate GTD user can face. Been there, done that. Still am, probably.

Reflecting on My Failure to Build a Billion Dollar Company

The story of Gumroad, the publishing site. A very interesting view on startups.

StranglerApplication

One of Martin Fowler's refactoring approaches. I'm pretty sure you've done this; turns out it has a name.

The de Havilland Comet

The story of the first passenger jet disasters, and why they happened.

Simple dependent types in Python

They may be a bit too simple for what you are looking for, but the potential is there. The post also links to the following, which I found ideal.

1-minute guide to real constants in Python

There's actually a Final type coming, someday, to Python, which will make constants real constants (as far as type checkers are concerned). Looking forward to that.

The Dunning-Kruger effect, and how to fight it

There is a quote in this interview with David Dunning which is just brilliant:

“The first rule of the Dunning-Kruger club is you don’t know you’re a member of the Dunning-Kruger club. People miss that.”

📚 Atomic Habits

This week I finished Atomic Habits (affiliate link). I didn't expect much, having read so many productivity books in my life. And indeed, there was nothing I hadn't read before, but the presentation, examples and overall feel of the book are excellent. I rated it 5 stars on Goodreads, which is not something I do often (about once a year).

14/01/2019 - 2019-1 Readings of the week

If you know me, you'll know I have a very extensive reading list. I keep it in Pocket, and it is part of my to-do list stored in Things3. It used to be very large (hovering around 230 items since August), but during Christmas it got out of control, reaching almost 300 items. That was too much, so I set myself a goal for 2019 to keep it trimmed and sweet. And indeed, since the beginning of the year I have read or canceled 171 articles (122 in the past week, 106 of which were read). That's a decently sized book!

To help me in this goal, I'll (hopefully) be writing a weekly post about what interesting stuff I have read the past week. Beware, this week may be a bit larger than usual, since I wanted to bring the numbers down as fast as possible.

NOTE: The themes are varied. Software/data engineering, drawing, writing. Expect a similar wide range in the future as well.

The Nature of Infinity, and Beyond – Cantor’s Paradise

A short tour through the life of Georg Cantor and his quest to prove the continuum hypothesis. In the end, he was vindicated.

Statistical rule of three

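The rule says that if an event has not occurred in n independent trials, an approximate 95% upper confidence bound for its probability is 3/n. It comes from requiring (1 - p)^n = 0.05: taking logs gives n·ln(1 - p) = ln 0.05 ≈ -3.0, and ln(1 - p) ≈ -p for small p. Here is a minimal Python sketch comparing the shortcut with the exact bound. The function names are my own.

```python
def rule_of_three(n: int) -> float:
    """Approximate 95% upper bound on an event probability after n trials with zero events."""
    if n <= 0:
        raise ValueError("n must be positive")
    return 3.0 / n


def exact_upper_bound(n: int, confidence: float = 0.95) -> float:
    """Largest p for which seeing zero events in n trials still has probability >= 1 - confidence.

    Solves (1 - p) ** n == 1 - confidence for p.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return 1.0 - (1.0 - confidence) ** (1.0 / n)


if __name__ == "__main__":
    for n in (30, 300, 3000):
        print(n, rule_of_three(n), round(exact_upper_bound(n), 5))
```

Since -ln 0.05 ≈ 2.996 is slightly below 3, the shortcut is always a little conservative, and the gap shrinks as n grows.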
What is a decent estimate of something that hasn't happened yet? Find the answer here.

Apache Arrow: use of jemalloc

A short technical post detailing why Arrow moved to jemalloc for memory allocation.

Subpixel Text Encoding

This is... unexpected. A font that is 1 pixel wide.

Solving murder with Prolog

I have always been a fan of Prolog, and this is a fun and understandable example if you have never used it.

What Parkour Classes Teach Older People About Falling

Interesting. I'm still young, but I'll keep this in mind for the future.

Implementing VisiCalc

The detailed story of how VisiCalc (the first electronic spreadsheet for personal computers) was written.

The military secret to falling asleep in two minutes

I have actually been doing something similar since I was about 12. It might be a stretch to say 2 minutes, but it works.

Index 1,600,000,000 Keys with Automata and Rust

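The post builds minimized automata that share both the prefixes and the suffixes of the keys. A trie is a much simpler relative: it is also a deterministic acyclic automaton, but it only shares prefixes. Below is a minimal Python sketch of one, acting as a set of keys (an acceptor, not a transducer mapping keys to values), to show the two operations such an index supports: membership and prefix search. `TrieAutomaton` is a hypothetical name, not the API of the `fst` crate.

```python
from typing import Dict, Iterator, List


class TrieAutomaton:
    """Deterministic acyclic automaton that shares common prefixes (a trie)."""

    def __init__(self, keys: List[str]) -> None:
        # State i has outgoing transitions self.transitions[i]; state 0 is the start.
        self.transitions: List[Dict[str, int]] = [{}]
        self.final: List[bool] = [False]
        for key in keys:
            self._add(key)

    def _add(self, key: str) -> None:
        state = 0
        for ch in key:
            nxt = self.transitions[state].get(ch)
            if nxt is None:
                # Create a new state and the transition leading to it.
                nxt = len(self.transitions)
                self.transitions.append({})
                self.final.append(False)
                self.transitions[state][ch] = nxt
            state = nxt
        self.final[state] = True  # accepting state marks the end of a key

    def contains(self, key: str) -> bool:
        state = 0
        for ch in key:
            state = self.transitions[state].get(ch, -1)
            if state == -1:
                return False
        return self.final[state]

    def with_prefix(self, prefix: str) -> Iterator[str]:
        """Yield every stored key starting with prefix, in lexicographic order."""
        state = 0
        for ch in prefix:
            state = self.transitions[state].get(ch, -1)
            if state == -1:
                return
        stack = [(state, prefix)]
        while stack:
            current, acc = stack.pop()
            if self.final[current]:
                yield acc
            # Push children in reverse order so they are popped in sorted order.
            for ch, nxt in sorted(self.transitions[current].items(), reverse=True):
                stack.append((nxt, acc + ch))
```

For example, `list(TrieAutomaton(["cat", "car", "card", "care", "dog"]).with_prefix("car"))` gives `["car", "card", "care"]`. The post's automata also merge shared suffixes, which is what makes them so much smaller than a trie on large key sets.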
Super interesting (and long) post about how finite state automata (FSA) and finite state transducers (FST) can be used for fast search in Rust (I've been a bit into Rust lately). Also, the author, Andrew Gallant (BurntSushi), has a cat called Cauchy, something I appreciate since my cat is named Fatou.

How to Draw from Imagination: Beyond References

An excellent piece on gesture drawing and improving your technique.

Anatomy of a Scala quirk

All languages have their WATs; they are just harder to find in Scala.

Chaotic attractor reconstruction

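Takens' theorem says that, under generic conditions, the delay vectors (x(t), x(t + τ), ..., x(t + (m - 1)τ)) built from a single observed variable reconstruct the attractor up to a smooth change of coordinates, provided the embedding dimension m is large enough. Here is a minimal stdlib-only sketch, assuming we only observe the x coordinate of the Lorenz system (integrated with a crude Euler step). The values dim=3 and tau=10 are illustrative choices, not taken from the post.

```python
from typing import List, Sequence, Tuple


def lorenz_x(steps: int, dt: float = 0.01, sigma: float = 10.0,
             rho: float = 28.0, beta: float = 8.0 / 3.0) -> List[float]:
    """Integrate the Lorenz system with explicit Euler steps and keep only x(t)."""
    x, y, z = 1.0, 1.0, 1.0
    xs: List[float] = []
    for _ in range(steps):
        dx = sigma * (y - x)
        dy = x * (rho - z) - y
        dz = x * y - beta * z
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        xs.append(x)
    return xs


def delay_embed(series: Sequence[float], dim: int, tau: int) -> List[Tuple[float, ...]]:
    """Build delay vectors (s[t], s[t + tau], ..., s[t + (dim - 1) * tau])."""
    if dim < 1 or tau < 1:
        raise ValueError("dim and tau must be positive")
    count = len(series) - (dim - 1) * tau
    return [tuple(series[t + k * tau] for k in range(dim)) for t in range(max(count, 0))]


if __name__ == "__main__":
    points = delay_embed(lorenz_x(5000), dim=3, tau=10)
    print(len(points), points[0])
```

Plotting `points` in 3D should give a shape resembling the Lorenz butterfly, even though y and z were never observed.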
An easy example, in Python, of Takens' embedding theorem.

Hello, declarative world

An exploration of the imperative and functional styles, and of where declarative programming fits in that landscape.

Python with Context Managers

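A context manager is any object with `__enter__` and `__exit__` methods. The `with` statement calls the first on entry and the second on exit, even when the body raises, and a truthy return value from `__exit__` suppresses the exception. `contextlib.contextmanager` builds the same thing from a generator: code before `yield` runs on entry, and code after it runs on exit. Below is a minimal sketch of both forms. `Tracker` and `tracked` are hypothetical names.

```python
from contextlib import contextmanager
from typing import Iterator, List


class Tracker:
    """Class-based context manager that records entry and exit in a log."""

    def __init__(self, log: List[str], name: str) -> None:
        self.log = log
        self.name = name

    def __enter__(self) -> "Tracker":
        self.log.append(f"enter {self.name}")
        return self  # bound to the target of "with ... as x"

    def __exit__(self, exc_type, exc, tb) -> bool:
        status = exc_type.__name__ if exc_type is not None else "ok"
        self.log.append(f"exit {self.name} ({status})")
        return False  # do not suppress exceptions raised in the body


@contextmanager
def tracked(log: List[str], name: str) -> Iterator[None]:
    """Generator-based equivalent: the finally block runs even if the body raises."""
    log.append(f"enter {name}")
    try:
        yield
    finally:
        log.append(f"exit {name}")
```

The class form gives direct access to the exception details in `__exit__`, while the generator form is shorter when all you need is setup and teardown.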
Although I have written tons of Python, I never took the time to write context managers or to understand how they work. This one was good.

Raymond Chandler's Ten Commandments For the Detective Novel

You never know when you may write a detective novel. Ruben and the case of the dead executor.

Seven steps to 100x faster

An optimisation tour of a piece of code written in Go, from data structures to allocation pressure.

Writing a Faster Jsonnet Compiler

A semi-technical post by Databricks about Jsonnet and why they wrote their own compiler. It also serves as an introduction to Jsonnet ("compilable JSON").

Bonus

Monoid font and Poet Emacs theme

Today I switched from Solarized Dark and Fira Code Pro to the above. It looks interesting.

30/04/2016 - Ruben Berenguel, PhD

Started a long time ago. It was supposed to be about a phenomenon leading to chaos: separatrix splitting. I got a research grant. I worked on holomorphic dynamics. Travelled. Presented. Too many roadblocks with the separatrix problem. Switched topics. Welcome to a different new world: infinite-dimensional dynamical systems. I read the literature. Researched, proved some things. My grant ran out. I worked. A lot. Too many times I considered giving up. I kept thinking of the sunk cost fallacy. My advisor and my girlfriend helped me keep at it. I pushed on.

All this happened in early February, and since then many more things have happened. Right now I'm working partly in London and partly from home as a mix of software and data engineer, with a dash of devops to make it spicier. Truly a jack of all trades (but I especially like the data science part).